Hyperconverged Infrastructure Pros and Cons

What are the pros and cons of Hyperconverged Infrastructure (HCI)? The question is straightforward; the answer is less so. There are many HCI vendors on the market, and non-HCI infrastructure choices have caught up to HCI for some use cases, further blurring the lines. Defining the pros and cons of HCI means exploring a number of grey areas, though some characteristics of HCI remain universal.

HCI offerings break down into two broad categories: those that ship as appliances, and those that do not. Some HCI solutions are not sold by the vendor as an appliance, but are supported by that vendor only if the HCI software is installed on reference architectures provided by partners. This analysis treats those solutions as equivalent to appliances.

This is because the reference architecture approach is a way for small startups to offload tier-1 and tier-2 support onto partners, who accept the support burden in exchange for a bigger share of the profits. The basic premise of HCI appliances – supporting a small list of configurations in order to drive the costs of support down – still applies to the reference architecture approach.

There are very few true “install anywhere” Do-It-Yourself (DIY) HCI offerings remaining. Most have either gone the pseudo-appliance route of only supporting reference architectures, or operate in between the appliance approach and the DIY approach. This latter group of vendors typically uses a product compatibility list that offers more freedom than reference architectures, but is still quite restrictive.

For the purposes of this analysis, we’ll assume that all HCI solutions are either sold by the HCI vendor as an appliance, or use the reference architecture approach. True DIY HCI will be ignored: combined, sales of HCI other than appliances or reference architectures occupy a rounding error’s worth of market share.

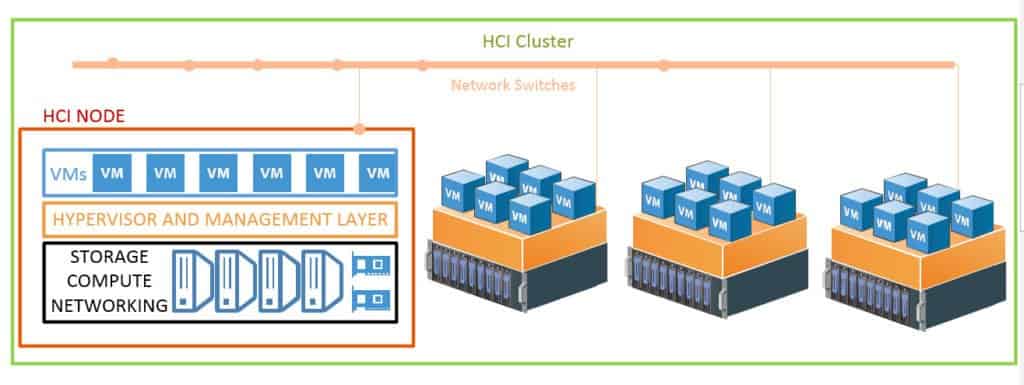

To create a list of pros and cons, one must have something to compare HCI to. Because HCI is typically used as an alternative to traditional IT infrastructure, this will be the basis of the comparison. A traditional IT infrastructure is defined as one where storage, compute and networking are separate infrastructure components, each with their own management interfaces, and often sold by separate vendors.

This analysis assumes that all solutions – whether HCI or traditional IT – meet basic standards for resiliency and suitability. This means that traditional marketing check-box items such as the ability to non-disruptively handle drive failures, and workload high availability, will not be discussed. They are assumed to be universal in any serious IT infrastructure solution sold in 2018.

HCI’s Origin Story

It is impossible to discuss the pros of HCI without discussing the reason HCI came to be. If we ignore all the attempts by marketing teams to rewrite history, the real reason that HCI was invented is that traditional centralized storage of the 2000s was both expensive and miserable to manage.

“Difficult to manage” was broken into two areas of complaint: ongoing administration of storage, and upgrades/migration of storage. The issues relating to the ongoing administration of centralized storage largely focused around the necessity of managing Logical Unit Numbers (LUNs).

LUNs are used to carve up a large Storage Area Network (SAN) array so that it can serve multiple workloads. Without going into details, managing LUNs was so much of a pain that it often required a dedicated storage administrator.

An alternative to LUNs existed in the form of Network Attached Storage (NAS) filers. NASes didn’t require administrators to fuss around with LUNs; they could put multiple workloads on a single share. Unfortunately, NASes didn’t – and for the most part still don’t – support multipath. This means that if the network link to the NAS goes down, there’s no way to non-disruptively switch to a backup network connection.

HCI overcomes the limitations of both SANs and NASes by offering the best of both worlds: it’s as easy to use as a NAS, but has the resiliency against failure of a SAN.

The other major pain point was upgrades and/or data migration. This pain point was such a big deal that somewhere around 2010 there was an explosion of different approaches to storage. Hundreds of different vendors attempted to redefine how the industry uses storage, and HCI vendors were a big part of that.

Upgrading or replacing a SAN or NAS has traditionally been referred to as a “forklift upgrade”. This terminology comes from the fact that most centralized storage units are so heavy that it would be a violation of workplace safety regulations for humans to try to lift one onto a rack.

Forklift upgrades require data migration: data must be moved from the old storage unit to the new one. This often did (and in many cases still does) require turning all associated workloads off to perform the migration. This is a major disruption, and again an area where HCI shines.

HCI was specifically designed to be capable of non-disruptive upgrades. The idea is that new nodes are added to a cluster on an as-needed basis. Old nodes can be retired if they no longer suit the cluster’s needs, while newer, more powerful nodes can be added. Hypothetically, one could continue this indefinitely, never needing to move or migrate a workload as part of the upgrade process.
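The add-then-retire idea can be illustrated with a toy model. The sketch below is not any vendor's upgrade procedure: the `Node` type, the `rolling_replace` function and the assumption that usable capacity is the only constraint are all hypothetical simplifications. Real products also rebalance data and verify replication health before a node is removed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    capacity_tb: float  # usable storage this node contributes (illustrative unit)


def rolling_replace(cluster, old_name, new_node, required_tb):
    """Swap one node for another without dropping below required capacity.

    The new node joins before the old one is retired, so capacity rises
    first and only then falls. Returns a new list; the input is not mutated.
    """
    if not any(n.name == old_name for n in cluster):
        raise ValueError(f"unknown node: {old_name}")
    expanded = cluster + [new_node]  # step 1: add the new node
    remaining = [n for n in expanded if n.name != old_name]  # step 2: retire the old one
    if sum(n.capacity_tb for n in remaining) < required_tb:
        raise RuntimeError("retiring this node would leave too little capacity")
    return remaining


if __name__ == "__main__":
    cluster = [Node("a", 10), Node("b", 10), Node("c", 10)]
    upgraded = rolling_replace(cluster, "a", Node("d", 20), required_tb=30)
    print([n.name for n in upgraded])
```

Repeating this one node at a time is what lets a cluster be refreshed without a separate data migration step, at least in this simplified model.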

Additional HCI Benefits

While HCI was originally designed to solve those two primary pain points, a number of benefits emerged as a result. In eliminating the need for SANs, HCI also eliminated the need for a dedicated network infrastructure to support that SAN. Many SANs – especially in the early 2000s – required dedicated networks to operate. HCI solutions almost always use a standard Ethernet network.

Because HCI was designed to eliminate the forklift upgrade headache, scaling HCI clusters is also easy. Additional storage or compute capacity can be added as needed, just by adding additional nodes, or by swapping out less powerful nodes for more powerful ones.

HCI’s integration of storage, compute, and (for most vendors) networking into a single product means that most HCI solutions offer a “single pane of glass” approach to infrastructure management. Most HCI solutions offer a unified management interface that covers storage, compute and networking, eliminating the need for administrators with specializations in these areas.

The integration of storage, compute and networking into a single product also creates a “one throat to choke” support experience. This contrasts starkly with the “circle of blame” that traditional IT often gets trapped in. When multiple vendors are involved in providing infrastructure components, individual vendors often point the finger at one another instead of actually working to solve the problem. HCI solutions are usually provided by a single vendor, or at least have a very well-defined support chain, as the product is provided as an integrated whole.

The impact of multiple vendors in traditional IT extends beyond management interfaces and the support experience. Managing multiple vendors also means that organizations have to manage multiple supply chains for multiple vendors. HCI typically requires interacting with a single vendor. Traditional IT can require five vendors to accomplish the same basic tasks: storage, compute, networking, hypervisor, and monitoring.

The integration of monitoring, reporting and analytics into many HCI solutions is another benefit. Organizations are increasingly looking at IT automation, and automation begins with instrumentation.

Many, if not most, HCI solutions include a great deal of automation out of the box. The details vary from vendor to vendor, but it’s typical to find self-healing storage and networking, integrated data protection, and a single Application Programming Interface (API) that provides access to the entire infrastructure.

Thanks to being predominantly sold as an appliance, HCI also offers rapid setup. Most HCI solutions require only a few minutes to go from initial power on to serving workloads. Rounding out the list of HCI pros is storage flexibility: HCI nodes can be all-magnetic media, all-flash, or hybrid storage. Some HCI nodes are even emerging which offer NVMe/SATA all-flash hybrids, and which provide truly exceptional performance.

Hyperconvergence Cons

As with anything in IT, there are both benefits and compromises associated with the use of HCI. For most customers, the pros outweigh the cons, but it’s important to enter into any purchase decision with eyes open.

The biggest operational drawback for most HCI solutions is the number of network ports they consume. Most HCI solutions consume a minimum of 4 ports per node, though some HCI solutions consume up to 8 ports per node!

It’s hypothetically possible to design and use an HCI solution with a single port per node. Beware going this route, though, as it would offer zero resiliency against network failure, and wouldn’t separate the various types of traffic from one another. As such, it’s never a recommended design.

Some vendors advise the use of two network connections per node. This offers resiliency against the failure of a single network link, but combines all traffic types. Most vendors instead recommend four, placing management and replication traffic on one pair of redundant links and isolating workload traffic on its own pair.

Those vendors recommending 8 links per node are usually recommending independent redundant network pairs for each of the following: management, replication, VM traffic internal to the on-premises data center, and VM traffic destined for the WAN. This allows VM traffic destined for the WAN to be routed through various security solutions (such as intrusion detection or data loss prevention systems) that may not be compatible with VXLANs or other network overlays used by HCI products.
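To put these per-node figures in perspective, the following sketch tallies the switch ports a cluster would consume under each layout described above. The layout labels, the `switch_ports_needed` function and the 16-node cluster size are illustrative assumptions, not vendor terminology or recommendations.

```python
# Links per node for the layouts described in the text.
# The dictionary keys are illustrative labels only.
LINKS_PER_NODE = {
    "single_link": 1,           # no redundancy, all traffic combined (not recommended)
    "one_redundant_pair": 2,    # survives one link failure, all traffic combined
    "two_redundant_pairs": 4,   # management + replication pair, workload pair
    "four_redundant_pairs": 8,  # management, replication, internal VM, WAN-bound VM
}


def switch_ports_needed(nodes: int, layout: str) -> int:
    """Return the total switch ports consumed by a cluster of `nodes` nodes."""
    if nodes < 1:
        raise ValueError("a cluster needs at least one node")
    return nodes * LINKS_PER_NODE[layout]


if __name__ == "__main__":
    for layout in LINKS_PER_NODE:
        print(f"{layout}: {switch_ports_needed(16, layout)} ports for 16 nodes")
```

Under these assumptions, a 16-node cluster consumes 64 switch ports with the four-link layout and 128 with the eight-link layout, which is why port count deserves attention during planning.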

The remaining major compromises regarding HCI all flow from HCI being the product of a single vendor. There are no standards for HCI: one cannot take HCI nodes from one vendor and mix them with HCI nodes from another vendor. This means that choosing to switch HCI vendors is a forklift upgrade, which was the sort of thing most customers bought into HCI to avoid.

Being at the mercy of a single vendor also means being tied to that vendor’s roadmap and partnerships. Feature requests proceed on the vendor’s timetable, while third-party support for automation, monitoring, orchestration and other ecosystem functionality usually depends on a third party choosing to support the HCI vendor’s APIs.

Third-party integration can be a serious roadblock for some HCI providers. This often has less to do with third-party support than it does with partner ecosystem management by the vendor. Updates to the APIs can break third-party support, and this does happen when an HCI vendor doesn’t take the time to warn the third-party vendors that have built on top of that API that changes are coming.

Vendor lock-in, API support and related issues are not unique to HCI. They exist in traditional IT, and have done so for as long as IT has been around. The difference is one of scope: when a traditional IT vendor fumbles its ecosystem management duties, only one part of an organization’s IT is affected.

Losing visibility into storage for a few months because the storage vendor forgot to tell the monitoring vendor about API changes may be annoying, but IT only has to rely on a single workaround for that scenario. If an HCI vendor fumbles their ecosystem management, IT teams could lose visibility into everything below the operating system (see Figure 1). This is the natural result of collapsing so many infrastructure domains into a single product.

Customer Expectations

Success with HCI, like most things in IT, begins with a proper needs assessment. Customers who report disappointment with HCI almost universally engaged HCI vendors with completely unrealistic expectations about the product’s capabilities.

Stripping all the marketing off, HCI is for those who don’t want to implement their own infrastructure. Many HCI solutions have just enough of what’s needed to be considered “proper” data center infrastructure, and not much more.

Organizations looking to embrace bleeding-edge technologies will do better with traditional infrastructure than they will with HCI. A dedicated (and large) team of skilled IT experts will always be able to create a traditional IT infrastructure with more informative monitoring, more powerful automation, and more encompassing orchestration.

If your goal is to be the next Netflix, where you’re pushing the boundaries such that you redefine entire disciplines within IT, don’t use HCI. If, however, you need data center IT so simple that you just take the nodes out of the box, rack them, power them on, and go, HCI is probably the right solution for you.