Ensuring Availability, Data Protection and Disaster Recovery

Even the smallest of small businesses today depends on its IT resources being available on a 24/7 basis. Even short periods of downtime can wreak havoc, impact the bottom line, and mean having to cancel going out to lunch. Maintaining an agreed-upon level of infrastructure availability is critically important. On top of that, outages or other events resulting in loss of data can be a death knell for the business. Many businesses that suffer major data loss fail to recover in the long term and eventually make their way down the drain. Data protection is one of IT’s core services. Unfortunately, it’s also a hard service to provide at times, or at least, it was. There are now some hyperconverged infrastructure solutions that are uniquely positioned to solve, once and for all, the challenges across the entire data protection spectrum.

The Ins and Outs of Backup and Recovery

There are two primary metrics to consider when it comes to disaster recovery.

Recovery Point Objective (RPO)

If you’re using a nightly backup system, you’re implicitly adhering to a 24-hour Recovery Point Objective (RPO). You’re basically saying that losing up to 24 hours’ worth of data is acceptable to the business. RPO is the metric that defines how much data your organization is willing to lose in the event of a failure that has the potential to result in data loss. To reduce RPO, you need to back data up more often.
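The relationship between backup frequency and RPO can be sketched in a few lines. This is a minimal illustration, assuming the worst-case loss equals the full interval between backups and ignoring how long the backup itself takes; the function names are hypothetical and not part of any product.

```python
from datetime import timedelta


def worst_case_data_loss(backup_interval: timedelta) -> timedelta:
    """Worst case: the failure hits just before the next backup would run."""
    return backup_interval


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """A schedule meets the RPO if its worst-case loss does not exceed it."""
    return worst_case_data_loss(backup_interval) <= rpo


nightly = timedelta(hours=24)
hourly = timedelta(hours=1)

print(meets_rpo(nightly, rpo=timedelta(hours=24)))  # True
print(meets_rpo(nightly, rpo=timedelta(hours=4)))   # False
print(meets_rpo(hourly, rpo=timedelta(hours=4)))    # True
```

A nightly schedule satisfies a 24-hour RPO but not a 4-hour one; shortening the interval is the only lever in this simple model.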

Recovery Time Objective (RTO)

RPO is critically important as it defines just how much data you’re willing to lose. Once you’ve suffered a data loss, the critical metric shifts. Now, you’re more interested in how long it takes you to recover from that failure. How long is your organization willing to be without data while you work to recover it from backup systems? This metric is often used to support such statements as, “For every minute we’re down, the company loses $X.”

The Recovery Time Objective (RTO) is the formal name for this metric and is one that companies will go to great lengths to minimize. As is the case with RPO, the closer to zero you try to push RTO (that is, the less time you’re willing to be down), the more it costs to support.

To achieve very low RTO values, companies will often implement multi-pronged solutions, such as disaster recovery sites, fault tolerant virtual machines, clustered systems, and more.
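The “for every minute we’re down, the company loses $X” statement is simple arithmetic, and it can help to put numbers on it. The sketch below uses made-up values for the cost per minute and the RTO target; both are assumptions for illustration only.

```python
def downtime_cost(minutes_down: float, cost_per_minute: float) -> float:
    """Total cost of an outage at a flat per-minute loss rate."""
    return minutes_down * cost_per_minute


def meets_rto(minutes_down: float, rto_minutes: float) -> bool:
    """An outage is within the RTO if recovery finishes in time."""
    return minutes_down <= rto_minutes


COST_PER_MINUTE = 500.0  # hypothetical "$X", not a figure from the text
RTO_MINUTES = 60.0       # hypothetical recovery time objective

for outage in (15, 60, 240):
    cost = downtime_cost(outage, COST_PER_MINUTE)
    ok = meets_rto(outage, RTO_MINUTES)
    print(f"{outage:>3} min down: ${cost:,.0f} lost, within RTO: {ok}")
```

Comparing the cost of a missed RTO against the cost of the infrastructure needed to meet it is one way to decide how low the target should go.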

The Data Protection and Disaster Recovery Spectrum

Let’s talk a bit about data protection as a whole. When you really look at it, data protection is a spectrum of features and services. If you assume that data protection means “ensuring that data is available when it’s needed,” the spectrum also includes high availability for individual workloads. Figure 1 provides you with a look at this spectrum.

Figure 1: The Data Protection Spectrum

RAID

Yes, RAID is a part of your availability strategy, but it’s also a big part of your data protection strategy. IT pros have been using RAID for decades. For the most part, it has proven to be very reliable and has enabled companies to deploy servers without much fear of negative consequences in the event of a hard drive or two failing. Over the years, companies have changed their default RAID levels as the business needs have changed, but the fact is that RAID remains a key component in even the most modern arrays.

The RAID level you choose is really important, but you shouldn’t have to worry about it. The solution should do it for you. That said, it’s well known that today’s very large hard drives have made traditional RAID systems tough to support. When a drive fails in a traditional RAID array, it can take hours or even days to fully rebuild that drive. Keep this in mind as you read on; we’ll be back to it shortly.
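A rough calculation shows why rebuilds stretch from hours into days as drives grow. This is a back-of-the-envelope sketch: the drive size and rebuild rates are assumed values, and real rebuild times depend on the RAID level, controller, and how busy the array is.

```python
def rebuild_hours(drive_tb: float, rebuild_mb_per_s: float) -> float:
    """Hours needed to rewrite a full drive at a sustained rebuild rate."""
    drive_mb = drive_tb * 1_000_000  # decimal terabytes to megabytes
    return drive_mb / rebuild_mb_per_s / 3600


# Hypothetical 10 TB drive at two assumed sustained rebuild rates.
print(round(rebuild_hours(10, 100), 1))  # 27.8 hours on an otherwise idle array
print(round(rebuild_hours(10, 30), 1))   # 92.6 hours when competing with production I/O
```

While the rebuild runs, the array has reduced redundancy, which is why long rebuild windows matter for data protection and not just for performance.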

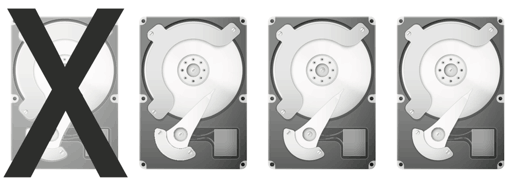

RAID is also leveraged in some hyperconverged infrastructure systems; however, with these systems, administrators are shielded from some of the complexity and configuration options that they used to work with on stand-alone storage arrays. Bear in mind that one of the tenets of hyperconverged infrastructure is simplicity. As such, you don’t have to go through a lot of effort to manage RAID in a hyperconverged system. It’s simply leveraged behind the scenes by the system itself. In Figure 2, you get a look at how RAID protects data.

Figure 2: On the data protection spectrum, RAID helps you survive the loss of a drive or two

Replication/RAIN/Disaster Recovery

RAID means you can lose a drive and still continue to operate, but what happens if you lose an entire node in a hyperconverged infrastructure cluster? That’s where replication jumps in to save the day. Many hyperconverged infrastructure solutions on the market leverage replication as a method for ensuring ongoing availability and data protection in the event that something takes down a node, such as a hardware failure or an administrator accidentally pulling the wrong power cord.

This is possible because replication means “making multiple copies of data and storing them on different nodes in the cluster.” Therefore, if a node is wiped off the face of the earth, there are one or more copies of that data stored on other cluster nodes.

Two Kinds of Replication

There are two different kinds of replication to keep in mind. One is called local and the other is called remote. Local replication generally serves to maintain availability in the event of a hardware failure. Data is replicated to other nodes in the cluster in the same data center. Remote replication is leveraged in more robust disaster recovery scenarios and enables organizations to withstand the loss of an entire site.

In some hyperconverged infrastructure solutions, like those shown in Figure 3, you can configure what is known as the replication factor (RF). The replication factor is just a fancy way of telling the system how many copies of your data you’d like to have. For example, if you specify a replication factor of 3 (RF3), there will be 3 copies of your data created and stored across disparate nodes. You will sometimes see replication-based availability mechanisms referred to as RAIN, which stands for Redundant Arrays of Independent Nodes.

Figure 3: Lost a node? Can’t find it? Don’t worry! Replication will save the day!
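To make the replication factor concrete, here is a toy model of replica placement. It assumes a simple round-robin layout across nodes, which is an illustration only and not how any particular vendor places data; the node and block names are hypothetical.

```python
def place_replicas(block_ids, nodes, rf):
    """Give each block `rf` copies on `rf` distinct nodes (round-robin)."""
    if rf > len(nodes):
        raise ValueError("replication factor cannot exceed node count")
    placement = {}
    for i, block in enumerate(block_ids):
        placement[block] = {nodes[(i + k) % len(nodes)] for k in range(rf)}
    return placement


def lost_blocks(placement, failed_nodes):
    """Blocks are lost only when every node holding a copy has failed."""
    failed = set(failed_nodes)
    return sorted(b for b, holders in placement.items() if holders <= failed)


nodes = ["node-a", "node-b", "node-c", "node-d"]
blocks = [f"b{i}" for i in range(8)]

rf2 = place_replicas(blocks, nodes, rf=2)
rf3 = place_replicas(blocks, nodes, rf=3)

print(lost_blocks(rf2, ["node-a"]))            # [] - one node lost, nothing missing
print(lost_blocks(rf2, ["node-a", "node-b"]))  # ['b0', 'b4'] - both copies gone
print(lost_blocks(rf3, ["node-a", "node-b"]))  # [] - a third copy survives
```

In this model, RF2 rides out any single node failure, while a second simultaneous failure can take out the blocks whose two copies happened to live on those two nodes.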

Besides helping you to make sure that your services remain available, replication goes way beyond just allowing you to withstand the loss of a node. When replication is taken beyond the data center to other sites, you suddenly gain disaster recovery capability, too. In fact, in some hyperconverged systems that leverage inline deduplication across primary and secondary storage tiers, that’s exactly what happens. After deduplication, data is replicated to other nodes and to other data centers, forming the basis for incredibly efficient availability and disaster recovery.

How About Both – RAID and RAIN Combined

Let’s go a little deeper into the RAID/RAIN discussion with an eye on hyperconverged infrastructure solutions that provide both. First, there are some downsides to relying on RAIN-based replication alone, particularly at Replication Factor 2 (RF2). There are solutions on the market that provide RF2. Systems based on RF2 can lose data if any two nodes or disks in a cluster fail at the same time, or if even just one node fails while another node is down for maintenance.

To make things a bit more resilient, you could bump up to RF3, but this replication factor then requires a minimum of five nodes at each site that uses RF3 and imposes an additional 50% penalty on capacity. With RF3 in place, you can also start to think about using erasure coding, but it carries a lot of CPU overhead due to the way that erasure coding works. This may not be suitable when trying to support high-performance applications.
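One way to read the 50% capacity figure is as the extra raw storage RF3 needs compared with RF2 for the same amount of usable data. The sketch below works through that arithmetic under that assumption; the 10 TB figure is arbitrary.

```python
def raw_capacity_needed(usable_tb: float, replication_factor: int) -> float:
    """Raw capacity consumed when every byte is stored `replication_factor` times."""
    return usable_tb * replication_factor


def extra_raw_fraction(rf_from: int, rf_to: int) -> float:
    """Fractional increase in raw capacity when moving between replication factors."""
    return (rf_to - rf_from) / rf_from


usable = 10.0  # hypothetical usable data set, in TB
print(raw_capacity_needed(usable, 2))  # 20.0 TB raw at RF2
print(raw_capacity_needed(usable, 3))  # 30.0 TB raw at RF3
print(extra_raw_fraction(2, 3))        # 0.5, i.e. 50% more raw capacity than RF2
```

Put another way, only half of the raw capacity is usable at RF2 and only a third at RF3, before any deduplication or compression is applied.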

How about combining RAID and RAIN into a single solution? Maybe you combine the use of local RAID 6 on individual nodes so that any node can tolerate double disk failures and keep virtual machines up and running. With each individual node very well protected, the likelihood of losing an entire node is reduced. From there, you apply RAIN so that, in the event that a complete node is lost, you can tolerate that, too. The strategic combination of RAID and RAIN enables tolerance against a broad set of failure scenarios.

What is Erasure Coding?

Erasure coding is usually specified in an N+M format: 10+6, a common choice, means that data and erasure codes are spread over 16 (N+M) drives, and that any 10 of those can recover data. That means any six drives can fail. If the drives are on different appliances, the protection includes appliance failures, so six appliance boxes could go down without stopping operations.

Courtesy: http://www.networkcomputing.com/storage/raid-vs-erasure-coding/a/d-id/1297229
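The idea behind N+M protection can be shown with the simplest possible case, a single XOR parity chunk (M = 1). This is only a sketch of the principle: layouts such as 10+6 need more general codes than plain XOR, and the helper names here are hypothetical.

```python
from functools import reduce
from typing import List


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length chunks."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(chunks: List[bytes]) -> bytes:
    """Return one parity chunk: the XOR of all equal-length data chunks."""
    return reduce(xor_bytes, chunks)


def recover(surviving: List[bytes], parity: bytes) -> bytes:
    """Rebuild the single missing data chunk from the survivors and parity."""
    return reduce(xor_bytes, surviving, parity)


def raw_per_usable(n: int, m: int) -> float:
    """Raw capacity consumed per unit of usable capacity in an N+M layout."""
    return (n + m) / n


data = [b"AAAA", b"BBBB", b"CCCC"]             # N = 3 data chunks
parity = encode(data)                          # M = 1 parity chunk
rebuilt = recover([data[0], data[2]], parity)  # pretend chunk 1 was lost

print(rebuilt == data[1])     # True
print(raw_per_usable(10, 6))  # 1.6
```

A 10+6 layout consumes 1.6 units of raw capacity per usable unit while tolerating six failures; three full copies would consume 3.0 units and tolerate two. The trade-off is the extra computation needed to encode and rebuild.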

Backup and Recovery

Despite your best efforts, there will probably come a day when you need to recover data lost from production. Data losses can happen for a variety of reasons:

- Human error — People make mistakes. Users accidentally delete files. Administrators accidentally delete virtual machines. IT pros can sometimes accidentally pull the wrong disk from a storage system or unplug the wrong server’s power cord.

- Hardware failure — When hardware fails, sometimes it fails spectacularly. In fact, hardware failure may not even be the result of failed IT hardware. You may end up in a situation, for example, in which the data center cooling systems fail and servers automatically shut down as the temperature rises. This could be considered a server hardware failure because of the outcome (the server going down), when in fact the server is actually doing exactly what it’s supposed to do in this case.

- Disasters — Hurricanes, tornados, floods, a new Terminator movie. Disasters come in all kinds of forms and can result in data loss.

The SimpliVity Story on Protecting Production Data and Availability

by Brian Knudston

Being a hyperconvergence platform, SimpliVity first provides the compute and storage infrastructure for customers’ production applications. As data is ingested from the hypervisor, we stage the VM data into DRAM on the OmniStack Accelerator Card across two of our nodes within a single datacenter. With data now protected across multiple nodes, in addition to supercapacitor and flash storage protecting the DRAM on each OmniStack Accelerator Card, we acknowledge a successful write back to the VM and process the data for deduplication, compression and optimization to permanent storage on the Hard Disk Drives (HDDs) on both nodes. Once this process is complete, every VM in a SimpliVity datacenter can survive the loss of at least two HDDs in every node in the datacenter AND the loss of a full SimpliVity node.

Disaster Recovery

Disaster recovery takes backup one step further than the basics. Whereas backup and recovery are terms that generally refer to backing up data and, when something happens, recovering that data, disaster recovery instead focuses on recovery beyond just the data.

Disaster recovery demands that you think about the eventual needs by starting at the end and working backward. For example, if your data center is hit by an errant meteor (and assuming that this meteor has not also caused the extinction of the human race) recovering your data alone will be insufficient. You won’t have anything onto which to recover your data if your data center is obliterated.

Before we get too fatalistic, let’s understand what the word disaster really means in the context of the data center. It’s actually kind of an unfortunate term since it immediately brings to mind extinction-level events, but this is not always the case for disaster recovery.

There are really two kinds of disasters on which you need to focus:

- Micro-level disasters — These are the kinds of events that are relatively common, such as losing a server or portion of a data center. In general, you can quickly recover in the same data center and keep on processing. Often, recovery from these kinds of disasters can be achieved through backup and recovery tools. With that said, these events will probably still result in downtime.

- Macro-level disasters — These are the kind of life-altering events that keep IT pros awake at night and include things like fires, acts of {insert deity here}, or rampaging hippos. Recovery from these disasters will mean much more than just restoring data.

Business Continuity

Since disaster recovery is kind of a loaded term, a lot of people prefer to think about the disaster recovery process as “business continuity” instead. However, that’s not all that accurate. Business continuity is about all aspects of a business continuing after a disaster. For example, where are the tellers going to report after the fire? How are the phone lines going to be routed? Disaster recovery is an IT plan that is a part of business continuity.

Thinking about the disaster recovery process with the end in mind requires that you think about what it would take to have everything back up and running — hardware, software, and data — before disaster strikes.

Yes, your existing backup and recovery tools probably play a big role in your disaster recovery plan, but that’s only the very beginning of the process.

Disaster recovery plans also need to include, at a bare minimum:

- Alternate physical locations — If your primary site is gone, you need to have other locations at which your people can work.

- Secondary data centers — In these locations, or in the cloud, you need to have data centers that can handle the designated workloads from the original site. This includes a space for the hardware, the hardware itself, and all of the software necessary to run the workloads.

- Ongoing replication — In some way, the data from your primary site needs to make its way to your secondary site. This is a process that needs to happen as often as possible in order to achieve desirable RTOs and RPOs. In an ideal world, you would have systems in place that can replicate data in minutes after it has been handled in the primary data center. The right hyperconverged infrastructure solution can help you to achieve these time goals.

- Post-disaster recovery processes — Getting a virtual machine back up and running is just the very first step in a disaster recovery process. RTO is a measure of more than just the restoration of the VM. From there, processes need to kick off that include all the steps required to get the application and data available to the end user. These processes include IP address changes, DNS updates, re-establishment of communication paths between parts of an n-tier application stack, and other non-infrastructure items.
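Because RTO covers every step up to the point where end users can work again, it helps to add up the whole runbook rather than just the VM restore. The step names below follow the items listed above; the durations and the 60-minute target are invented for illustration.

```python
# Hypothetical runbook: (step, estimated minutes). Durations are assumptions.
RECOVERY_STEPS = [
    ("restore virtual machines at the secondary site", 5),
    ("change IP addresses", 10),
    ("update DNS records", 30),
    ("re-establish paths between application tiers", 20),
    ("validate the application with end users", 15),
]


def total_recovery_minutes(steps) -> int:
    """End-to-end recovery time if the steps run one after another."""
    return sum(minutes for _, minutes in steps)


def meets_rto(steps, rto_minutes: int) -> bool:
    """True when the whole runbook, not just the VM restore, fits the RTO."""
    return total_recovery_minutes(steps) <= rto_minutes


print(total_recovery_minutes(RECOVERY_STEPS))      # 80
print(meets_rto(RECOVERY_STEPS, rto_minutes=60))   # False
print(meets_rto(RECOVERY_STEPS[:1], rto_minutes=60))  # True, but only the VM is back
```

In this example the VM restore alone easily fits the target, yet the full process misses it, which is the argument for automating and orchestrating the remaining steps.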

SimpliVity’s answer to full spectrum DR

by Brian Knudston

SimpliVity alone makes it simple for you to achieve the first part of disaster recovery, which is making sure that virtual machines are always available, even if a data center is lost. Over the last several months, SimpliVity has focused on providing integration with other tools that can help automate and orchestrate all of the remaining steps of the disaster recovery process. This includes pre-built packages of SimpliVity functionality within VMware’s vRealize Automation and Cisco’s UCS Director, as well as support for partners developing tools on top of SimpliVity APIs, such as VM2020’s EZ-DR.

Data Reduction in the World of Data Protection

We’re going to be talking a lot about data reduction – deduplication and compression – in this book. They’re a huge part of the hyperconverged infrastructure value proposition and, when done right, can help IT to address problems far more comprehensively than when it’s done piecemeal.

When it comes to data protection, data reduction can be really important, especially if that data reduction survives across different time periods – production, backup, and disaster recovery. If that data can stay reduced and deduplicated, some very cool possibilities emerge. The sidebar below highlights one such solution.

The Data Virtualization Platform and Disaster Recovery

by Brian Knudston

To protect data at specific instances of time, SimpliVity designed backup and restoration operations directly into the DNA of the SimpliVity OmniStack Data Virtualization Platform, enabled by our ability to dedupe, compress and optimize all the VM data. This results in backups and restores that can be taken in seconds, which can help reduce Recovery Point Objectives (RPOs) and Recovery Time Objectives (RTOs), while consuming almost no IOPS off the HDDs.

When protecting data across datacenters, SimpliVity maintains awareness of data deduplication across the different sites. If a VM is configured to back up to a remote datacenter, the receiving datacenter determines which unique blocks need to be transported across the WAN and the sending datacenter only sends those unique blocks. (See the Lego Analogy post for more details.) This drastically reduces the WAN bandwidth necessary between sites, which allows more frequent backups to remote sites, and it eliminates IOPS by reducing the amount of data that needs to be read from and written to the HDDs.
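The exchange described above, where the receiver says which blocks it lacks and the sender ships only those, can be modeled generically. This is a simplified sketch of deduplication-aware replication in general, not SimpliVity’s implementation; the block size, the `Site` class, and the use of SHA-256 fingerprints are all assumptions.

```python
import hashlib

BLOCK_SIZE = 4  # unrealistically small so the example stays readable


def split_blocks(data: bytes, size: int = BLOCK_SIZE):
    """Cut a byte string into fixed-size blocks."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def fingerprint(block: bytes) -> str:
    """Content hash used to recognize identical blocks."""
    return hashlib.sha256(block).hexdigest()


class Site:
    """Hypothetical remote datacenter holding a deduplicated block store."""

    def __init__(self):
        self.store = {}

    def missing(self, fingerprints):
        """Tell the sender which fingerprints this site does not hold yet."""
        return [fp for fp in fingerprints if fp not in self.store]

    def receive(self, blocks):
        for block in blocks:
            self.store[fingerprint(block)] = block


def replicate(data: bytes, remote: Site) -> int:
    """Send only blocks the remote lacks; return how many crossed the WAN."""
    by_fp = {fingerprint(b): b for b in split_blocks(data)}
    needed = remote.missing(list(by_fp))
    remote.receive([by_fp[fp] for fp in needed])
    return len(needed)


remote = Site()
print(replicate(b"AAAABBBBAAAACCCC", remote))  # 3 - the repeated AAAA block is sent once
print(replicate(b"AAAABBBBDDDD", remote))      # 1 - only DDDD is new to the remote site
```

Only fingerprints travel for blocks the remote site already holds, which is where the WAN bandwidth savings come from in this model.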

Fault Tolerance

Availability is very much a direct result of the kinds of fault tolerance features built into the infrastructure as a whole. Data center administrators have traditionally taken a lot of steps to achieve availability, with each step intended to reduce the risk of a fault in various areas of the infrastructure. These can include:

- Using RAID — As previously mentioned, RAID allows you to experience drive failures within a hyperconverged node and keep operating.

Simplified Storage Systems

Bear in mind that RAID, and storage in general, becomes far simpler to manage in a hyperconverged infrastructure scenario. There is no more SAN and, in most cases, RAID configuration is an “under the hood” element that you don’t need to worry about. This is one less component that you have to worry about in your data center.

- Redundant power supplies — Extra power supplies are, indeed, a part of your availability strategy, because they allow you to experience a fault with your power system and still keep servers operating.

- Multiple network adapters — Even network devices can fail, and when they do, communications between servers and users and between servers and other servers can be lost. Unless you have deployed multiple switches into your environment and multiple network adapters into your servers, you can’t survive a network fault. Network redundancy helps you make your environment resilient to network-related outages.

- Virtualization layer — The virtualization layer includes its own fault tolerance mechanisms, some of which are transparent and others of which require a quick reboot. For example, VMware’s High Availability (HA) service continuously monitors all of your vSphere hosts. If one fails, workloads are automatically restarted on another node. There is some downtime, but it’s minimal. In addition to HA, VMware makes available a Fault Tolerance (FT) feature. With FT, you actually run multiple virtual machines. One is the production system and the second is a live shadow VM that springs into action in the event that the production system becomes unavailable. However, with all that said, there are some limitations inherent in hypervisor-based fault tolerance technology, described in the sidebar entitled Fault Tolerance Improvements in vSphere 6. This is why some hyperconverged infrastructure vendors eschew hypervisor-based fault tolerance mechanisms in favor of building their own, more robust solutions.

Fault Tolerance Improvements in vSphere 6

Frankly, Fault Tolerance (FT) in vSphere has been all but useless, except for the smallest virtual machines. Here’s an excerpt from VMware’s documentation explaining the limitations of FT: “Only virtual machines with a single vCPU are compatible with Fault Tolerance.” This limitation is one of the many items that hold back FT from being truly usable across the board. vSphere 6 increases Fault Tolerance capabilities to virtual machines with up to 4 vCPUs. This is still a significant limitation when you consider that many VMs are deployed with 8 vCPUs or more, particularly for large workloads.

End Results: High Availability, Architectural Resiliency, Data Protection, and Disaster Recovery

No one wants downtime. It’s expensive and stressful. Most organizations would be thrilled if IT could guarantee that there would be no more downtime ever again. Of course, there is no way to absolutely guarantee that availability will always be 100%, but organizations do strive to hit 99% to 99.999% availability as much as possible.
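Availability percentages are easier to reason about when converted into allowed downtime. The conversion below assumes a 365-day year and counts all downtime, planned or not, against the target.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def allowed_downtime_minutes(availability_pct: float) -> float:
    """Minutes of downtime per year permitted by an availability target."""
    return MINUTES_PER_YEAR * (1 - availability_pct / 100)


for target in (99.0, 99.9, 99.99, 99.999):
    minutes = allowed_downtime_minutes(target)
    print(f"{target}% -> {minutes:,.1f} minutes/year ({minutes / 60:.2f} hours)")
```

At 99%, the budget is roughly 3.65 days of downtime a year; at 99.999%, it shrinks to a little over five minutes, which leaves almost no room for manual recovery steps.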

High availability is really the result of a combination of capabilities in an environment. In order to enable a highly available application environment, you need to have individual nodes that can continue to work even if certain hardware components fail and you need to have a cluster that can continue to operate even if one of the member nodes bites it.

Hyperconverged infrastructure helps you to achieve your availability and data protection goals in a number of different ways. First, the linear scale-out nature of hyperconverged infrastructure (i.e., as you add nodes, you add all resources, including compute, storage, and RAM) means that you can withstand the loss of a node because data is replicated across multiple nodes with RAIN. Plus, for some hyperconverged solutions, internal use of RAID means that you can withstand the loss of a drive or two in a single node. When the combination of RAIN+RAID is extended with replication to a remote site, you can withstand the loss of an entire data center and keep on operating with little to no loss of data.

As you research hyperconverged infrastructure solutions, it’s important to make sure that you ask a lot of questions about how vendors provide availability and data protection in their products. The answers to these questions will make or break your purchase.

Summary

It’s clear that data protection and high availability are key components in any data center today. The cloud has become another way that companies can improve their availability and data protection systems.